2025

Embodied Representation Alignment with Mirror Neurons

Wentao Zhu, Zhining Zhang, ..., Yizhou Wang

International Conference on Computer Vision (ICCV), 2025

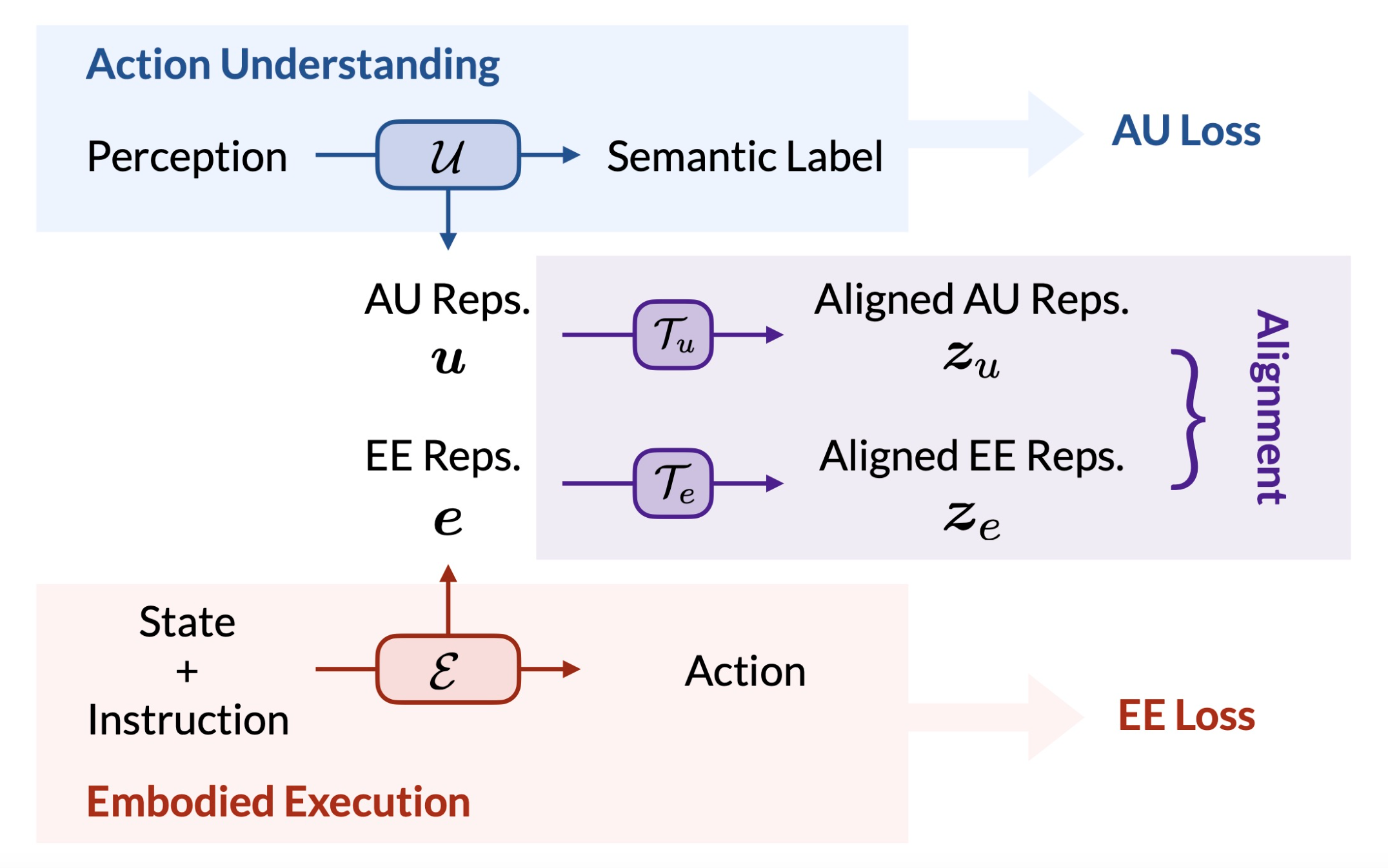

We bridge visual perception and embodied execution from the perspective of mirror neurons.

AutoToM: Scaling Model-based Mental Inference via Automated Agent Modeling

Zhining Zhang*, Chuanyang Jin*, Mung Yao Jia*, Shunchi Zhang*, Tianmin Shu (* equal contribution)

Spotlight, Annual Conference on Neural Information Processing Systems (NeurIPS), 2025

We introduce AutoToM, an automated agent modeling method for scalable, robust, and interpretable mental inference. With an LLM as the backend, AutoToM combines the robustness of Bayesian models with the open-endedness of language models, offering a scalable and interpretable approach to machine Theory of Mind (ToM).

2024

Language models represent beliefs of self and others

Wentao Zhu, Zhining Zhang, Yizhou Wang

International Conference on Machine Learning (ICML), 2024

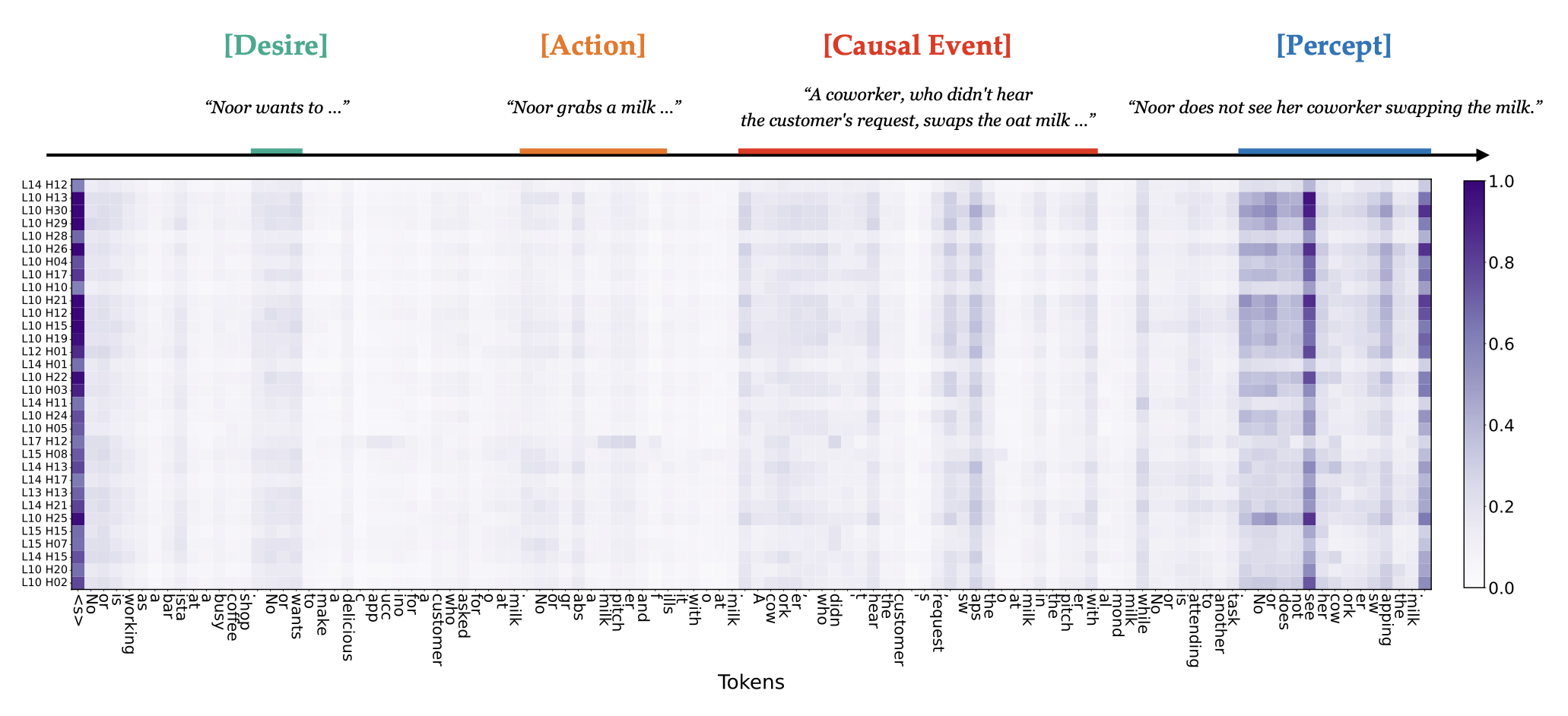

We investigate belief representations in LMs and find that the belief status of characters in a story is linearly decodable from LM activations. We further propose a way to manipulate LMs through these activations to enhance their Theory of Mind performance.
