Hi! I am a fourth-year undergraduate in the School of EECS, Peking University.

I work at the intersection of cognitive science and language models. I aspire to achieve human-level intelligence in machines.

I am fortunate to have worked with Professors Heng Ji, Tianmin Shu, and Yizhou Wang. I am also deeply grateful to my amazing mentors, Wentao Zhu and Chi Han, for their invaluable guidance and support along the way.

Experience

- Peking University, B.S. in Computer Science, Sep. 2022 - Present
- Johns Hopkins University, Visiting Student, June 2024 - Sep. 2024

Honors & Awards

- May Fourth Scholarship, Highest-level Scholarship @ Peking University, 2025
- Merit Student, Peking University, 2025
- Zhi-Class Scholarship, 2024
- Peking University Scholarship, 2023
- Award for Scientific Research, Peking University, 2023

News

‘AutoToM: Scaling Model-based Mental Inference via Automated Agent Modeling’ will be presented at NeurIPS 2025 as a Spotlight!

‘Embodied Representation Alignment with Mirror Neurons’ is presented at ICCV 2025!

‘Language Models Represent Beliefs of Self and Others’ was presented at ICML 2024.

Publications - Not able to set up "Selected Pubs" yet :-)

AutoToM: Scaling Model-based Mental Inference via Automated Agent Modeling

Zhining Zhang*, Chuanyang Jin*, Mung Yao Jia*, Shunchi Zhang*, Tianmin Shu (* equal contribution)

Spotlight, Annual Conference on Neural Information Processing Systems (NeurIPS), 2025

We introduce AutoToM, an automated agent modeling method for scalable, robust, and interpretable mental inference. Using an LLM as the backend, AutoToM combines the robustness of Bayesian models with the open-endedness of language models, offering a scalable and interpretable approach to machine Theory of Mind (ToM).

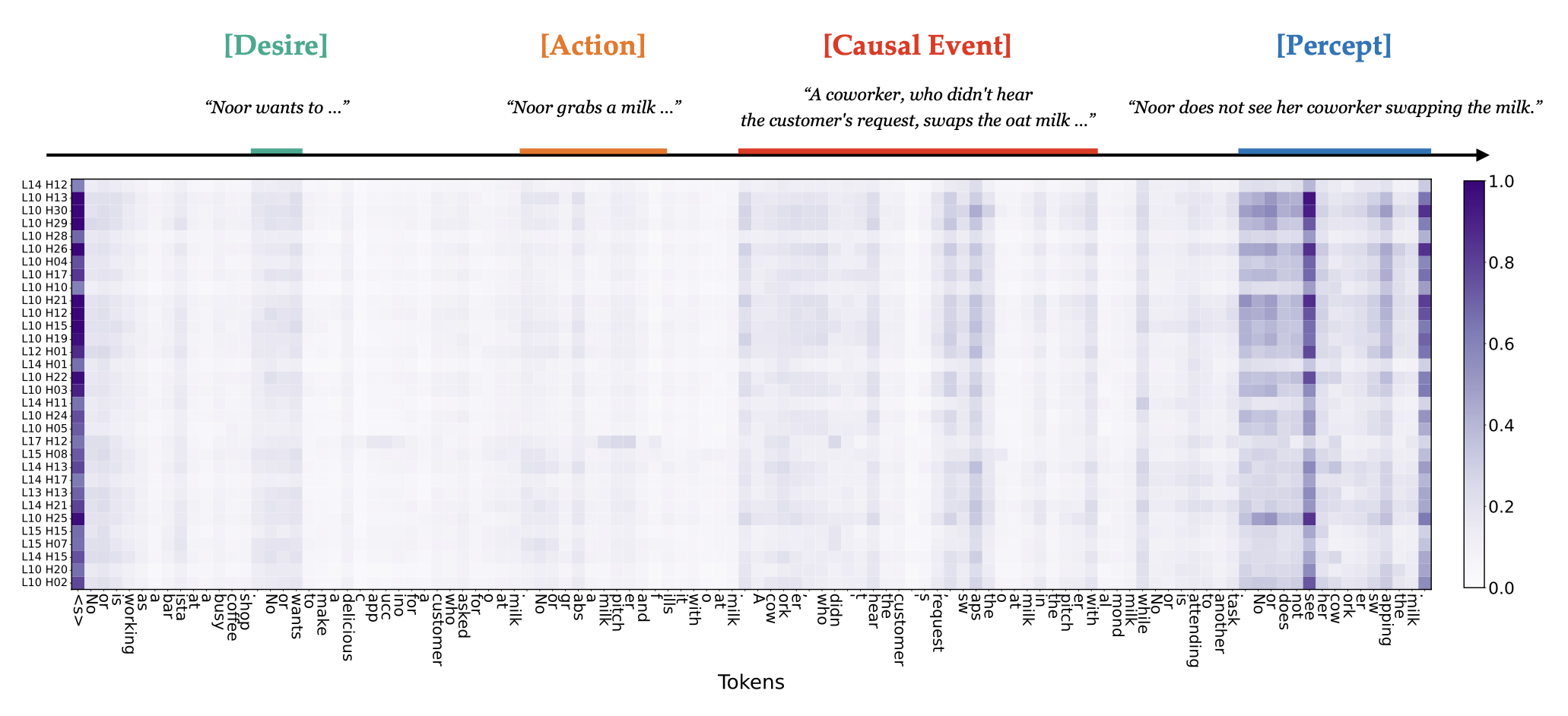

Language models represent beliefs of self and others

Wentao Zhu, Zhining Zhang, Yizhou Wang

International Conference on Machine Learning (ICML), 2024

We investigate belief representations in LMs and find that the belief status of characters in a story is linearly decodable from LM activations. We further propose a way to manipulate LMs through these activations to improve their Theory of Mind performance.
